This article is a quick step-by-step guide to making an SSIS package loop through a directory (folder) of files in order to populate a database table. We could add each file as a separate connection manager, but this is inefficient and cannot accommodate files that are added later.

Why?

I am creating an extract SSIS package that takes a text file as its source and populates a database table with its data. Note that this only works if all of the source text files have the same number of columns and the same column widths.
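Because the loop reuses one connection manager for every file, a file with a different layout will break the load partway through. A minimal pre-check sketch in Python follows. It assumes comma-delimited files whose first row is representative, and the folder, the "File*.txt" pattern and the `check_consistent_columns` name are all hypothetical. It only compares column counts. Checking fixed column widths would also need the file specification.

```python
import csv
import glob
import os


def check_consistent_columns(folder, pattern="File*.txt", delimiter=","):
    """Hypothetical helper: count the columns in the first row of each
    matching file and report whether every file has the same count.

    Returns (ok, counts), where counts maps each file path to its column count.
    Assumes delimited text files; it does not check fixed-width layouts.
    """
    counts = {}
    for path in sorted(glob.glob(os.path.join(folder, pattern))):
        with open(path, newline="") as handle:
            # An empty file yields an empty first row (0 columns).
            first_row = next(csv.reader(handle, delimiter=delimiter), [])
        counts[path] = len(first_row)
    # A set of size 0 or 1 means there is no mismatch between files.
    return len(set(counts.values())) <= 1, counts
```

Running this against the source folder before executing the package shows which file, if any, has a different layout.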

How?

I've adapted my real working product into an example. As my work was for a Personnel/HR project, where data confidentiality matters, some of the images below are censored or use changed data.

- Add a variable (preferably scoped to the package) called "SourceExtractFile" with the data type "String". Set its Value to the full path of the first file, including its extension (e.g. "C:\Temp\SourceFiles\File0001.txt"; I used a network share without any issues).

- Add a Connection Manager:

  - Right-click in the "Connection Managers" pane.

  - Select "New Flat File Connection...".

  - Browse to the first file in the folder to loop through and select it.

  - Set the connection manager name and specify the columns as usual.

  - Click OK to create the connection manager, then open its "Properties".

  - Under Expressions, click the ellipsis.

  - Under Property, select "ConnectionString".

  - In Expression, type @[User::SourceExtractFile]

  - Click OK to save it.

- Add a Foreach Loop Container to your "Control Flow". (NB: this was missing from my SSIS Toolbox. If you do not see it while on the "Control Flow" design tab, select Tools > Choose Toolbox Items > SSIS Control Flow Items and tick "Foreach Loop Container".)

- Add a Data Flow Task to the container (or, if it already exists, drag it into the container).

- Edit the ForEach Loop Container (or double-click on it)

- Under General, give it a reasonable name of your choice

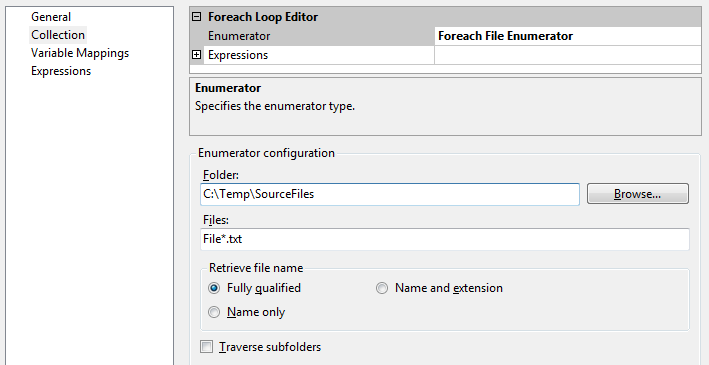

- Under Collection, select as Enumerator the "Foreach File Enumerator"

- Select the source folder which contains all the files to loop through

- Specify the file name convention with wildcard to indicate which files (in this example "File*.txt", all files starting with "File" - eg. "File0001.txt", "File0002.txt")

- For "Retrieve File Name" I put "Fully Qualified" (hoping this means the full path is used as just putting the file name did not work).

- Under "Variable Mappings" specify the Variable "User::SourceExtractFile" (based on this example) and keep the Index at 0 (zero).

- OK to close the dialog

- Edit your Data Flow as usual, selecting the dynamic connection manager for the Flat File Source.

- Done.
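To make the moving parts concrete, here is a rough Python model of what the configured loop does on each iteration. It is an illustration, not how SSIS is implemented. The enumerator lists the files matching the wildcard, the mapped variable receives one value per file, and the connection string expression reads that variable before the data flow runs. The names `foreach_file`, `run_package` and `load_file` are hypothetical. The files are sorted only to make the sketch deterministic, and `fnmatch` follows the host operating system's case rules.

```python
import fnmatch
import os


def foreach_file(folder, pattern, retrieve="fully_qualified"):
    """Yield one value per file in `folder` whose name matches `pattern`,
    mimicking the three "Retrieve File Name" options of the enumerator."""
    for name in sorted(os.listdir(folder)):  # sorted only for determinism
        full_path = os.path.join(folder, name)
        if not os.path.isfile(full_path) or not fnmatch.fnmatch(name, pattern):
            continue
        if retrieve == "fully_qualified":
            yield os.path.abspath(full_path)  # path + name + extension
        elif retrieve == "name_and_extension":
            yield name
        elif retrieve == "name_only":
            yield os.path.splitext(name)[0]
        else:
            raise ValueError(f"unknown retrieve option: {retrieve}")


def run_package(folder, pattern, load_file):
    """Set the loop variable for each file, then run the (hypothetical) load."""
    results = []
    for source_extract_file in foreach_file(folder, pattern):
        # Stands in for the ConnectionString expression @[User::SourceExtractFile].
        connection_string = source_extract_file
        results.append(load_file(connection_string))
    return results
```

The "name_only" and "name_and_extension" branches return values without a folder, so a load step using them could not locate the file. This matches the observation above that only "Fully Qualified" worked.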

